A primary standard is a highly pure reagent with a large molecular weight that can be weighed easily and accurately and then reacted with another substance to determine that substance's concentration.

To standardize an analytical method, we use standards that contain known amounts of analyte. In titration, standardization is the process of establishing the exact concentration of a prepared solution by titrating it against a standard solution that serves as the reference. The accuracy of a standardization depends on the reagents and glassware used to prepare the standards. Standard solutions are made from standard substances and have precisely determined concentrations.

A standard is a substance that contains a known concentration of an analyte, such as a drug, and can be used to determine unknown amounts or to calibrate analytical instruments. Analytical standards are divided into two types: primary standards and secondary standards.

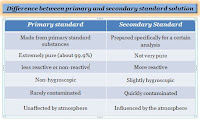

A primary standard is a highly pure reagent with a large molecular weight that can be weighed easily and reacted with another substance, while a secondary standard is a material whose active agent content has been determined by comparison with a primary standard.

What is a primary standard in chemistry?

A primary standard in chemistry is an extremely pure reagent (typically about 99.9% pure) that is easily weighed, so that its weighed mass gives a reliable measure of the number of moles of the substance present. A reagent is a chemical used to bring about a chemical reaction. Reagents are frequently used to detect specific chemicals in a sample solution or to determine how much of them is present.

Primary standards are substances that do not react with the components of air when exposed to it and that retain their composition for a long time. They are extremely pure and stable, with well-defined chemical and physical properties.

Primary standards are commonly used in titrations and other analytical chemistry techniques to determine the unknown concentration of a solute. Titration is a technique in which small, measured amounts of a reagent are added to a solution until the reaction is complete (the equivalence point, which is detected in practice as the endpoint). The volume of reagent consumed is then used to calculate the concentration of the sample solution. There are four types of titration: acid-base, redox, precipitation, and complexometric.
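The calculation behind a titration can be sketched in a few lines of Python. This is a minimal illustration rather than a validated laboratory procedure: the function name `analyte_molarity`, its parameters, and the sample figures are assumptions made for the example.

```python
def analyte_molarity(titrant_molarity, titrant_volume_ml, analyte_volume_ml,
                     analyte_per_titrant=1.0):
    """Return the analyte concentration (mol/L) from equivalence-point data.

    analyte_per_titrant is the mole ratio analyte:titrant taken from the
    balanced equation (1.0 for a 1:1 reaction such as HCl + NaOH).
    """
    if analyte_volume_ml <= 0:
        raise ValueError("analyte volume must be positive")
    # Moles of titrant delivered from the burette.
    moles_titrant = titrant_molarity * titrant_volume_ml / 1000.0
    # Convert to moles of analyte using the reaction stoichiometry.
    moles_analyte = moles_titrant * analyte_per_titrant
    return moles_analyte / (analyte_volume_ml / 1000.0)


# Hypothetical data: 25.00 mL of HCl requires 20.00 mL of 0.1000 M NaOH.
hcl_molarity = analyte_molarity(0.1000, 20.00, 25.00)
print(f"HCl concentration: {hcl_molarity:.4f} mol/L")
```

The result is only as reliable as the titrant concentration passed in, which is why that concentration is normally traced back to a primary standard.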

Examples of primary standards:

- Potassium dichromate (K2Cr2O7), sodium carbonate (Na2CO3), and potassium hydrogen phthalate (KHP)

- Potassium hydrogen phthalate can be used to standardize aqueous bases and, in acetic acid solution, perchloric acid.

- The primary standard for silver nitrate (AgNO3) reactions is sodium chloride (NaCl).

- Potassium dichromate (K2Cr2O7) is a primary standard for redox titrations

- Sodium carbonate (Na2CO3) is a primary standard for the titration of acids

- Potassium hydrogen iodate, KH(IO3)2, is a primary standard for the titration of bases

Why are primary standards used in chemistry?

- In measurement generally, a primary standard is a reference used to calibrate working standards. It is chosen for its accuracy and stability. Such standards can define a quantity such as length, mass, or time.

- In analytical chemistry, the term commonly refers to a reagent that is easy to weigh, has a high equivalent weight, is pure, is unlikely to change in weight when exposed to humid conditions, and has low reactivity with other chemicals.

- Using a primary standard helps ensure that the concentration determined for the unknown solution is accurate. Procedural errors slightly reduce the confidence in the result. However, for some substances, this method of standardization is the most reliable way to obtain a consistent concentration measurement.

- Primary standards are generally used to make standard solutions that have an exactly known concentration.

Properties of primary standards:

- They are unaffected by atmospheric oxygen

- They have a known molecular weight and formula

- They are the dominant reactants

- They are usually chemicals with a large molecular weight

- They keep a constant, uniform composition over long periods

A good primary standard has the following characteristics:

- It has a high degree of purity

- It is non-toxic

- It has low reactivity and high stability

- It is affordable and readily available

- It has a high equivalent weight

- It has a high solubility

- It is unlikely to absorb moisture from the air, so its mass changes little between humid and dry environments.

In practice, few compounds used as primary standards meet all of these requirements, although high purity is always essential. Furthermore, a compound that is an excellent primary standard for one analysis may not be the best choice for another.
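One reason a high equivalent weight is preferred is that a larger mass must be weighed out for the same number of equivalents, so a fixed balance uncertainty matters less. The sketch below illustrates this under stated assumptions: the function name `relative_weighing_error`, the ±0.1 mg balance uncertainty, and the 1 milliequivalent target are hypothetical choices, and the equivalent weights are approximate values for acid-base use.

```python
def relative_weighing_error(equivalent_weight_g, equivalents_needed,
                            balance_uncertainty_g=0.0001):
    """Relative error in the weighed mass caused by a fixed balance uncertainty."""
    mass_g = equivalent_weight_g * equivalents_needed
    if mass_g <= 0:
        raise ValueError("mass to weigh must be positive")
    return balance_uncertainty_g / mass_g


# Approximate acid-base equivalent weights in g per equivalent.
# KHP has one acidic hydrogen; Na2CO3 accepts two protons (105.99 / 2).
standards = {"KHP": 204.22, "Na2CO3": 105.99 / 2}

# Assumed target: 1 milliequivalent of each standard.
errors = {name: relative_weighing_error(eq_wt, 0.001)
          for name, eq_wt in standards.items()}

for name, error in errors.items():
    print(f"{name}: {error * 100:.3f} % relative weighing error")
```

Under these assumptions the standard with the higher equivalent weight shows the smaller relative error, which is the practical argument for that criterion.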

What is a primary standard solution?

A solution prepared from a primary standard compound is known as a primary standard solution. A primary standard is a high-purity (99.9%) material that can be dissolved in a known volume of solvent to form such a solution. For example, zinc powder dissolved in sulfuric acid (H2SO4) or hydrochloric acid (HCl) gives a primary standard solution that can be used to standardize EDTA solutions.
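Because the mass, purity, and final volume are all known, the concentration of a primary standard solution follows directly from a short calculation. The sketch below is illustrative only: the function name `standard_solution_molarity` and the sample mass, purity, and flask volume are assumptions, and the molar mass of potassium dichromate is an approximate value.

```python
def standard_solution_molarity(mass_g, molar_mass_g_per_mol, volume_ml,
                               purity=1.0):
    """Molarity (mol/L) of a solution made by dissolving a weighed solid.

    purity is the mass fraction of the pure compound (0.999 for 99.9%).
    """
    if molar_mass_g_per_mol <= 0 or volume_ml <= 0:
        raise ValueError("molar mass and volume must be positive")
    if not 0 < purity <= 1:
        raise ValueError("purity must be a fraction between 0 and 1")
    # Only the pure fraction of the weighed mass contributes moles.
    moles = mass_g * purity / molar_mass_g_per_mol
    return moles / (volume_ml / 1000.0)


# Hypothetical preparation: 1.2260 g of K2Cr2O7 (about 294.18 g/mol),
# 99.9% pure, made up to the mark in a 250.0 mL volumetric flask.
dichromate_molarity = standard_solution_molarity(1.2260, 294.18, 250.0,
                                                 purity=0.999)
print(f"K2Cr2O7 concentration: {dichromate_molarity:.5f} mol/L")
```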

Frequently Asked Questions (FAQ):

What is a secondary standard?

A substance that has been standardized against a primary standard for use in a specific analysis is called a secondary standard. Secondary standards are often used to calibrate analytical methods. Sodium hydroxide (NaOH) is often employed as a secondary standard after its concentration has been determined using a primary standard.
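As an illustration of how a secondary standard gets its concentration, the sketch below standardizes a sodium hydroxide solution against weighed portions of potassium hydrogen phthalate (KHP), which reacts with NaOH in a 1:1 mole ratio. The function name `naoh_molarity_from_khp` and the three trial results are hypothetical, and the KHP molar mass is an approximate value.

```python
KHP_MOLAR_MASS = 204.22  # g/mol, approximate


def naoh_molarity_from_khp(khp_mass_g, naoh_volume_ml, khp_purity=1.0):
    """NaOH concentration (mol/L) from one titration of weighed KHP."""
    if naoh_volume_ml <= 0:
        raise ValueError("titrant volume must be positive")
    moles_khp = khp_mass_g * khp_purity / KHP_MOLAR_MASS
    # KHP and NaOH react 1:1, so moles of NaOH equal moles of KHP.
    return moles_khp / (naoh_volume_ml / 1000.0)


# Hypothetical replicate trials: (mass of KHP in g, NaOH volume in mL).
trials = [(0.5105, 24.95), (0.5110, 25.00), (0.5098, 24.90)]

results = [naoh_molarity_from_khp(mass, volume) for mass, volume in trials]
mean_molarity = sum(results) / len(results)
print(f"Mean NaOH concentration: {mean_molarity:.4f} mol/L")
```

Averaging replicate titrations is a common way to reduce the effect of random error in any single trial.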

Why is the use of a primary standard solution important?

Primary standards are necessary to determine unknown concentrations or to prepare working standards in titrations.

What is the difference between a primary and a secondary standard?

A primary standard is a reagent that can be easily weighed and whose mass is representative of the number of moles it contains, while a secondary standard is a substance that has been standardized against a primary standard for use in a particular analysis.
